Why HealthBench Is a Milestone -- But Not the Metric for Healthcare AI

.avif)

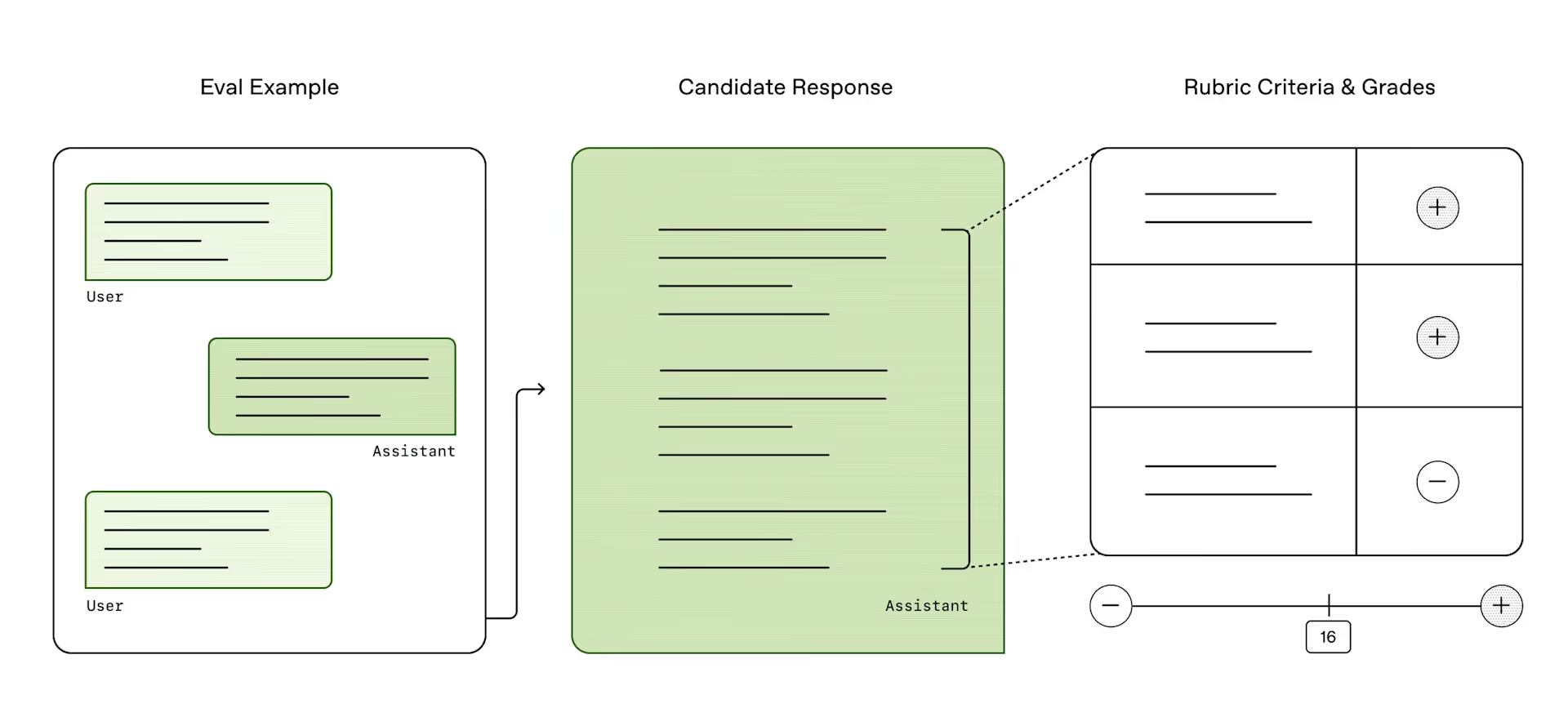

OpenAI's newly released HealthBench is an impressive step forward in evaluating the medical reasoning abilities of large language models (LLMs). It's the most comprehensive benchmark to date focused specifically on health-related conversations, with over 5,000 multi-turn, multilingual cases evaluated by physicians across 60 countries.

But despite its sophistication, HealthTech vendors should mostly ignore HealthBench when it comes to measuring the performance of real-world clinical AI systems.

Here's why.

What HealthBench Actually Measures

HealthBench tests the capabilities of general-purpose LLMs like GPT-4, Gemini, Claude, and Grok on health-focused Q&A scenarios. It focuses on:

- Factual accuracy

- Reasoning and completeness

- Contextual understanding

- Risk of harm and safety

Each case is scored using physician-authored rubrics - more than 48,000 criteria in total - making this one of the most robust open benchmarks in the LLM space.

Its core use case? Comparing how well frontier foundation models handle simulated medical conversations.

What It Doesn't Measure

For most HealthTech companies, particularly those focused on clinical operations, automation, or documentation, HealthBench misses the mark.

It doesn't test how AI performs in actual clinical systems. It doesn't measure whether your model assigns the correct ICD-10 codes, generates compliant SOAP notes, or correctly parses a referral fax. It doesn't evaluate documentation consistency across specialties or chart prep quality for pre-visit planning.

The majority of real-world healthcare AI today is not about answering patient questions - it's about reducing administrative burden. And that requires domain-specific, workflow-integrated, and highly contextual benchmarks.

If You're Building in Healthcare, You Need Internal Benchmarks

If you're developing clinical AI tools, your internal benchmark should reflect the actual tasks your system is performing. These should tie directly to the operational outcomes your clients care about.

For example:

- Did the AI correctly identify and populate the patient's active problem list?

- Did it capture HCC risk adjustment codes with accurate clinical justification?

- Did it generate a SOAP note that passed a physician's QA check with <5% edits?

- Did the claim created by your system pass payer validation with no downstream denials?

These are benchmarks you'll never find in HealthBench or other general-purpose LLM evaluations - but they're exactly what determines product success in clinical environments.

Once you've established these benchmarks, they need to become a core part of your dev lifecycle.

A Promising Direction: Industry-Standard Clinical Benchmarks

While internal benchmarking is essential, the industry also needs shared standards. That's where the Coalition for Health AI (CHAI) is doing promising work.

CHAI is developing:

- A lifecycle-based assurance framework for clinical AI

- A Model Card Registry for documenting model performance, fairness, and intended use

- Mechanisms for post-deployment monitoring across health systems

Their work is attempting to answer the hard question:What does "95% accuracy" actually mean in a real-world, regulated, high-stakes healthcare setting?

Final Takeaway

HealthBench is a strong academic and foundational benchmark - but it's not a deployment benchmark. If you're a HealthTech vendor building automation tools, documentation co-pilots, or clinical AI workflows, your metrics must live much closer to the ground.

That means:

- Domain-specific evaluation

- Tied to your product's purpose

- Owned by your team

- And run every time you touch the AI stack

Industry-wide efforts like CHAI are vital. But until they're mature and adopted, the best-performing HealthTech companies will be the ones who build and uphold their own rigorous standards.

.avif)

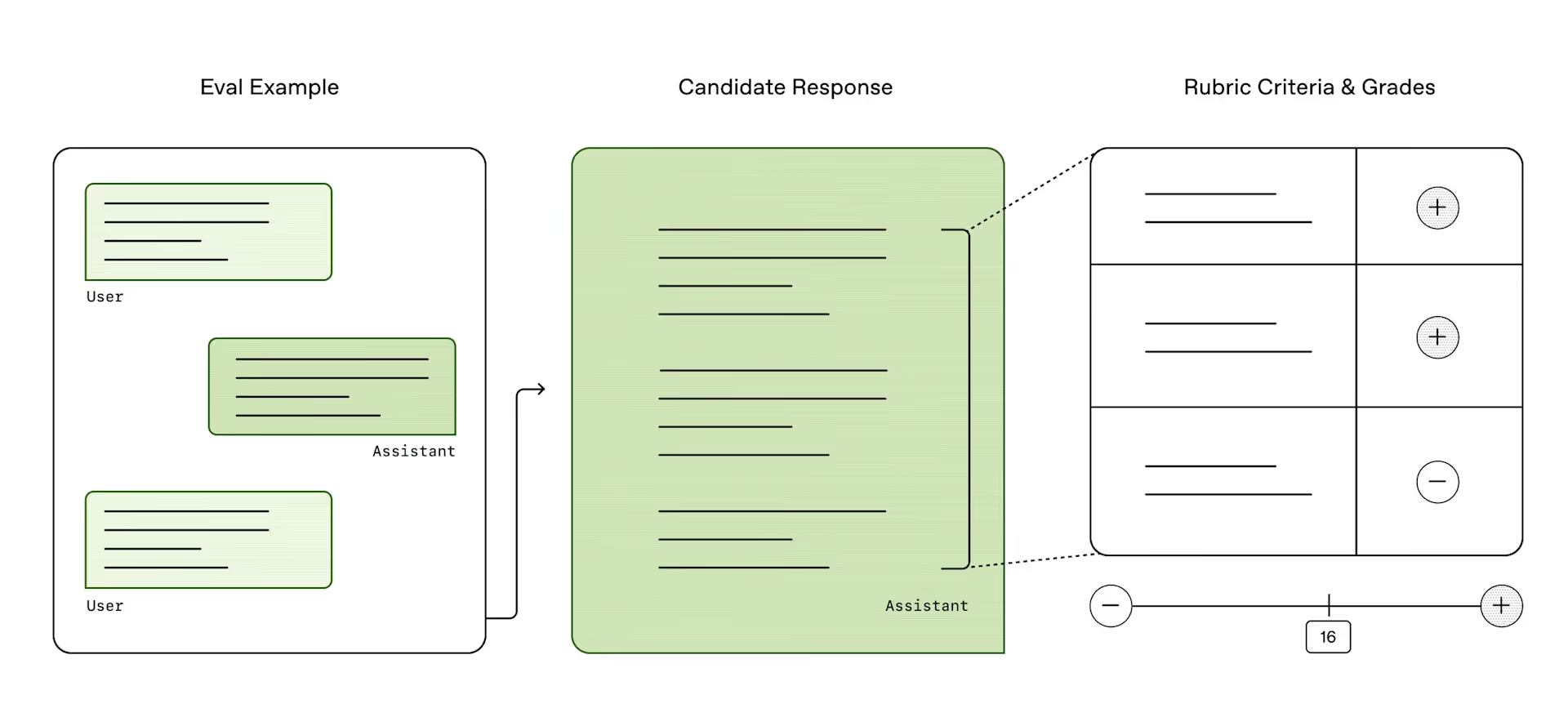

OpenAI's newly released HealthBench is an impressive step forward in evaluating the medical reasoning abilities of large language models (LLMs). It's the most comprehensive benchmark to date focused specifically on health-related conversations, with over 5,000 multi-turn, multilingual cases evaluated by physicians across 60 countries.

But despite its sophistication, HealthTech vendors should mostly ignore HealthBench when it comes to measuring the performance of real-world clinical AI systems.

Here's why.

What HealthBench Actually Measures

HealthBench tests the capabilities of general-purpose LLMs like GPT-4, Gemini, Claude, and Grok on health-focused Q&A scenarios. It focuses on:

- Factual accuracy

- Reasoning and completeness

- Contextual understanding

- Risk of harm and safety

Each case is scored using physician-authored rubrics - more than 48,000 criteria in total - making this one of the most robust open benchmarks in the LLM space.

Its core use case? Comparing how well frontier foundation models handle simulated medical conversations.

What It Doesn't Measure

For most HealthTech companies, particularly those focused on clinical operations, automation, or documentation, HealthBench misses the mark.

It doesn't test how AI performs in actual clinical systems. It doesn't measure whether your model assigns the correct ICD-10 codes, generates compliant SOAP notes, or correctly parses a referral fax. It doesn't evaluate documentation consistency across specialties or chart prep quality for pre-visit planning.

The majority of real-world healthcare AI today is not about answering patient questions - it's about reducing administrative burden. And that requires domain-specific, workflow-integrated, and highly contextual benchmarks.

If You're Building in Healthcare, You Need Internal Benchmarks

If you're developing clinical AI tools, your internal benchmark should reflect the actual tasks your system is performing. These should tie directly to the operational outcomes your clients care about.

For example:

- Did the AI correctly identify and populate the patient's active problem list?

- Did it capture HCC risk adjustment codes with accurate clinical justification?

- Did it generate a SOAP note that passed a physician's QA check with <5% edits?

- Did the claim created by your system pass payer validation with no downstream denials?

These are benchmarks you'll never find in HealthBench or other general-purpose LLM evaluations - but they're exactly what determines product success in clinical environments.

Once you've established these benchmarks, they need to become a core part of your dev lifecycle.

A Promising Direction: Industry-Standard Clinical Benchmarks

While internal benchmarking is essential, the industry also needs shared standards. That's where the Coalition for Health AI (CHAI) is doing promising work.

CHAI is developing:

- A lifecycle-based assurance framework for clinical AI

- A Model Card Registry for documenting model performance, fairness, and intended use

- Mechanisms for post-deployment monitoring across health systems

Their work is attempting to answer the hard question:What does "95% accuracy" actually mean in a real-world, regulated, high-stakes healthcare setting?

Final Takeaway

HealthBench is a strong academic and foundational benchmark - but it's not a deployment benchmark. If you're a HealthTech vendor building automation tools, documentation co-pilots, or clinical AI workflows, your metrics must live much closer to the ground.

That means:

- Domain-specific evaluation

- Tied to your product's purpose

- Owned by your team

- And run every time you touch the AI stack

Industry-wide efforts like CHAI are vital. But until they're mature and adopted, the best-performing HealthTech companies will be the ones who build and uphold their own rigorous standards.

James founded Invene with a 20-year plan to build the world's leasing partner for healthcare innovation. A Forbes Next 1000 honoree, James specializes in helping mid-market and enterprise healthcare companies build AI-driven solutions with measurable PnL impact. Under his leadership, Invene has worked with 20 of the Fortune 100, achieved 22 FDA clearances, and launched over 400 products for their clients. James is known for driving results at the intersection of technology, healthcare, and business.

Ready to Tackle Your Hardest Data and Product Challenges?

We can accelerate your goals and drive measurable results.