Synthetic Data in Healthcare: Strategic Implementation Guide for CTOs

Healthcare technology leaders face an impossible equation. Your AI team needs 10,000 patient records to build reliable clinical decision support systems, but you only have 500 records that meet your criteria. Meanwhile, each request for additional patient data triggers months of compliance reviews, and data breaches in healthcare now cost an average of $11 million – a 53% increase since 2020.

This data scarcity creates fundamental bottlenecks where innovation meets compliance. Synthetic data generation has emerged as both a potential solution and a hidden liability. While synthetic healthcare data can accelerate development without HIPAA restrictions, its application carries unique risks that can compromise patient safety when misapplied.

Why Synthetic Data Matters Now

Data Risks

Modern healthcare runs on data, but accessing real patient information creates enormous financial and legal risks. These costs extend beyond immediate breach response to include regulatory fines, legal fees, and long-term reputation damage.

From Months to Minutes: The Speed Advantage

Synthetic data offers a compelling alternative because it contains no actual patient identifiers, making it generally not considered Protected Health Information under HIPAA. This classification enables free use without patient consent or complex data use agreements, transforming development cycles from months to minutes.

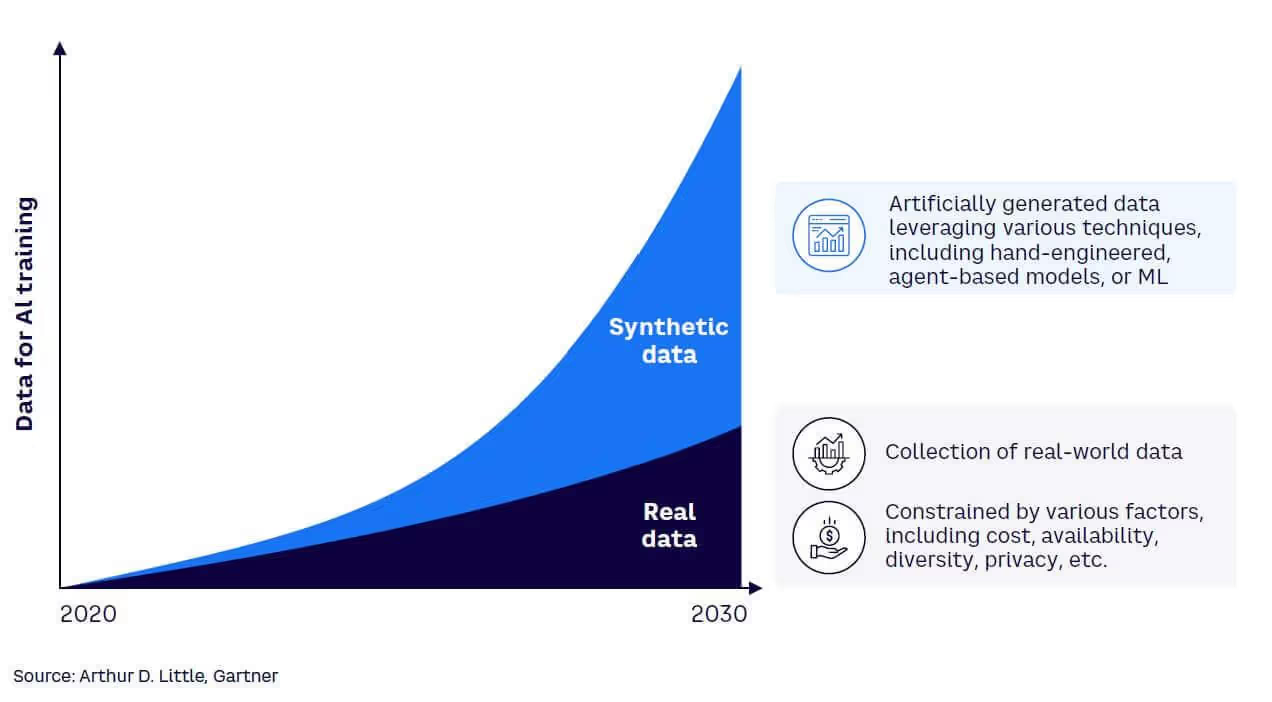

Gartner predicts that by 2030, synthetic data could completely replace real data in many AI training pipelines. Organizations like the Veterans Health Administration have already implemented synthetic data engines across 1,300 facilities, providing instant access to any patient cohort without lengthy approvals.

The Healthcare Data Dilemma Every CTO Faces

The "500 to 10,000" Problem

Healthcare organizations possess vast patient databases, yet accessing them for AI development proves nearly impossible. Statistical significance requires sample sizes that most healthcare datasets cannot provide while maintaining regulatory compliance. Your development teams understand this mathematical reality, but bridging the gap through traditional means often costs more than the AI project itself.

This data scarcity becomes particularly acute for rare conditions or specific patient populations. Training robust clinical decision support systems demands diverse datasets that reflect real-world complexity, yet acquiring such datasets through conventional channels creates insurmountable compliance overhead.

HIPAA Compliance Roadblocks

Every request for additional clinical data triggers comprehensive compliance reviews involving IRB approvals, privacy officer consultations, and data use agreements. Development cycles that should complete in weeks instead require quarters, pushing healthcare AI initiatives behind competitive timelines.

These compliance requirements exist for valid reasons, but they create operational paradoxes. Teams need iterative access to diverse datasets for model development, yet every iteration demands identical approval processes. The administrative overhead often exceeds the technical complexity of the AI development itself.

Why Conventional Data Acquisition Falls Short

Traditional approaches to healthcare data expansion face systematic limitations. External dataset purchases introduce integration challenges and often provide data that doesn't match specific organizational workflows or patient populations. Inter-institutional sharing requires legal frameworks that few organizations can navigate efficiently.

Quality consistency emerges as another barrier. Different healthcare systems employ varying documentation standards, coding practices, and clinical terminologies. Combining multiple datasets often introduces noise that reduces rather than improves model performance.

How Synthetic Data Works in Healthcare

Learning from Image Augmentation

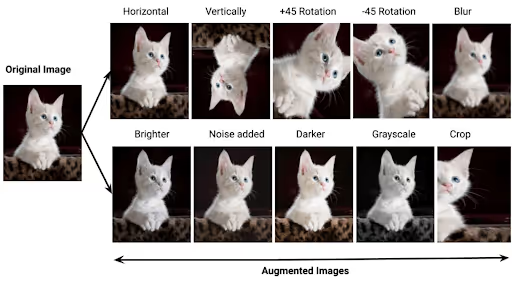

Computer vision provides familiar analogies for understanding synthetic data generation. Image recognition models routinely use transformation techniques like rotation, distortion, and warping to create training variations from existing images. These transformations teach models to recognize objects under various conditions without requiring comprehensive real-world data collection.

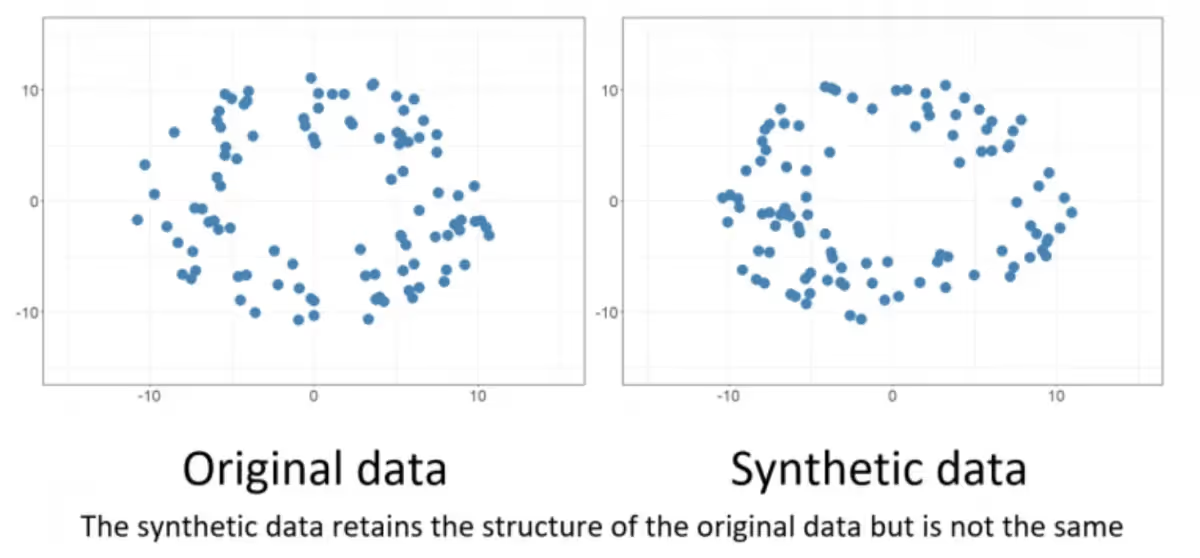

Similarly, generating clinical lookalike data should create variations of a healthcare dataset that preserve the underlying structure of that data. This is like the skewing, rotating, and translating the image of a tree doesn't replace that tree with a cat. When done correctly, synthetic patient records maintain the essential medical relationships and diagnostic patterns while introducing harmless variations in presentation, demographics, or documentation style.

This approach succeeds because essential object characteristics remain consistent across transformations. The fundamental features that define recognition targets persist while surface variations help models achieve robustness to environmental changes. In healthcare synthetic data, this means core clinical logic and statistical relationships should remain intact even as specific patient details vary.

However, this analogy also illuminates the critical risks ahead. When synthetic data generation fails, it's equivalent to that image transformation process accidentally replacing trees with cats. The output may appear statistically plausible but fundamentally misrepresents the clinical reality it's supposed to model. Understanding this distinction becomes crucial for recognizing the three major risk categories that can destroy healthcare AI projects when synthetic data ventures beyond safe variations into dangerous fabrications.

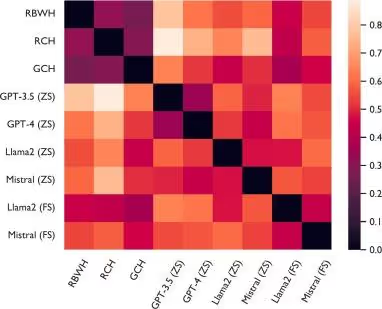

LLMs Generate Clinical Lookalikes

Healthcare synthetic data generation employs large language models trained on clinical text patterns. These systems analyze existing patient records and generate statistically similar clinical data that maintains diagnostic relationships while protecting individual privacy. Modern LLMs can produce synthetic clinical notes, diagnostic reports, and structured data elements that appear statistically indistinguishable from authentic patient records.

This capability theoretically solves scale problems by generating thousands of synthetic records from hundreds of real examples, providing the dataset sizes that machine learning demands without triggering compliance bottlenecks.

Three Critical Risk Categories That Destroy Healthcare AI Projects

Risk #1: Overfitting and Bias Reinforcement

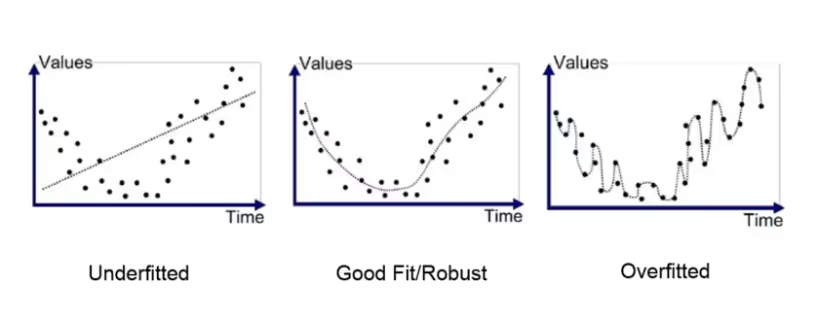

Synthetic data inherits and often amplifies the biases present in source datasets. If original patient records contain demographic skews, diagnostic blind spots, or systematic documentation errors, synthetic generation will magnify these problems across thousands of generated records.

This bias amplification becomes particularly dangerous in clinical applications. Models trained on biased synthetic data may systematically underperform for specific patient populations, creating disparate care quality and potentially harmful clinical recommendations. The statistical plausibility of synthetic data can mask these biases during development phases.

Identifying AI Artifacts in Clinical Data

The best way to detect artifacts in synthetic healthcare data is through validation against independent datasets. Real clinical notes are messy: abbreviations, inconsistent grammar, and irregular phrasing. Synthetic notes often look too clean. Statistical checks can reveal whether variation in the data matches what’s expected in actual patient records.

A familiar analogy is ChatGPT’s overuse of the em dash. It doesn’t change meaning, but it shows up far more often than in human writing. Healthcare data has similar “tells.” For example, large language models tend to over-generate certain ICD-10 codes because those codes dominate training data. The model assumes frequency means importance, even if it distorts the natural distribution of patient encounters.

Temporal patterns are another giveaway. Real patient data reflects irregular workflows and timing gaps. Synthetic data often smooths those over. Models trained on this “too consistent” data can fail when exposed to the unpredictable nature of real clinical practice.

Risk #2: The Circular Training Problem

The most insidious risk occurs when organizations use commercial AI systems to generate synthetic training data for similar AI applications. Using ChatGPT to create synthetic clinical notes, then fine-tuning GPT-5 on that synthetic data, creates feedback loops where AI systems train on their own outputs.

Model Collapse and Feedback Loops

Circular training leads to model collapse where diversity and clinical nuance disappear from generated outputs. Each generation cycle reinforces previous limitations, creating compounding distortions that move further from clinical reality. Models become increasingly confident about increasingly narrow interpretations of clinical scenarios.

This resembles information degradation in repeated transmission, but modern AI systems' statistical sophistication can mask this deterioration while systematically eliminating edge cases that clinical AI must handle reliably.

Risk #3: Missing Edge Cases in Patient Safety

Synthetic data excels at reproducing common patterns but systematically underrepresents rare conditions and unusual presentations that define safe clinical practice boundaries. These outliers often represent scenarios where AI assistance provides greatest clinical value or causes most harm through incorrect recommendations.

Healthcare applications demand reliability across the full spectrum of clinical presentations, including rare diseases and emergency scenarios that appear infrequently in training data. Synthetic data cannot generate truly novel edge cases because it operates within statistical boundaries of its training material.

Strategic Applications: When Synthetic Data Adds Value

Testing Pipelines and Development Workflows

Synthetic data is valuable when teams need to augment datasets for development. ETL pipelines, NLP models, and API integrations can be tested on synthetic clinical records that mirror real-world structures.

This enables debugging, performance testing, and system optimization without accessing sensitive patient information or waiting for IRB approvals. Teams can generate edge cases and stress-test scenarios on demand, improving software quality without privacy concerns.

Prototyping and Vendor Evaluation

Early-stage development and vendor demos benefit from augmented datasets. Multi-site outpatient groups transitioning to single EHR systems can populate test environments with synthetic records that mimic their clinical workflows. This enables rigorous sandbox testing of scheduling, billing, and clinical documentation without exposing real PHI or negotiating business associate agreements.

Data Augmentation for AI and Analytics

Synthetic data can expand limited datasets to support predictive modeling, statistical analysis, and AI development. Techniques like data augmentation and surrogate data enable teams to generate additional records without inventing information.

For example, a biostatistical model for a rare patient cohort can be trained using synthetic augmentation to improve model reliability, but only when applied carefully to avoid bias or circular learning risks.

Cross-Institutional Research and Collaboration

Synthetic data unlocks collaboration between organizations that cannot easily share real patient information. The Veterans Health Administration implemented synthetic data engines across their facilities, providing instant access to unified veteran datasets without lengthy approvals or privacy compromises.

Sheba Medical Center reported that synthetic data became "a huge game-changer in time to insight," enabling researchers to receive answers within the same day rather than waiting months, potentially doubling or tripling study pace.

Synthetic data works best as a tool for data augmentation, enabling teams to expand datasets, train models, and test workflows. Its effectiveness depends on careful application—it is not a replacement for real patient data.

When Synthetic Data Fails (or Falls Short)

High-Stakes Decisions Still Require Real Data

The closer applications get to direct patient care, the less suitable synthetic data becomes. By nature, synthetic data cannot capture every clinical nuance, and models performing well on synthetic data may fail on live data due to subtle differences.

Healthcare stakeholders show decreased trust in diagnostic outcomes derived from synthetic datasets. For clinical decision support, treatment planning, or regulatory submissions, industry observers expect a "two-step approach": synthetic data for initial development, then real-world data for final validation and deployment.

Potential Bias and Unrealistic Patterns

Synthetic data generation inherits biases from source material and can perpetuate systematic gaps unless actively corrected. If original datasets under-represent certain populations or conditions, synthetic versions amplify these limitations while creating false confidence in comprehensive coverage.

Synthetic data isn't perfect. It can still carry forward bias and may degrade in quality if excessive privacy constraints result in less useful data. Complex clinical correlations or rare events might not be captured by generative models, making synthetic data too smooth or average.

Need for Rigorous Validation

Synthetic datasets may appear statistically similar to real data while missing critical nuances. Any models developed on synthetic data require validation against real-world data before establishing trust. Without validation loops, organizations risk false confidence in tools that might fail on authentic inputs.

Maintaining feedback connections with real data proves crucial. Domain experts can often identify when synthetic patient records "don't make clinical sense" even when statistical measures appear acceptable. Independent quality benchmarks are emerging, but no universal standards exist yet.

Implementation Framework for Healthcare CTOs

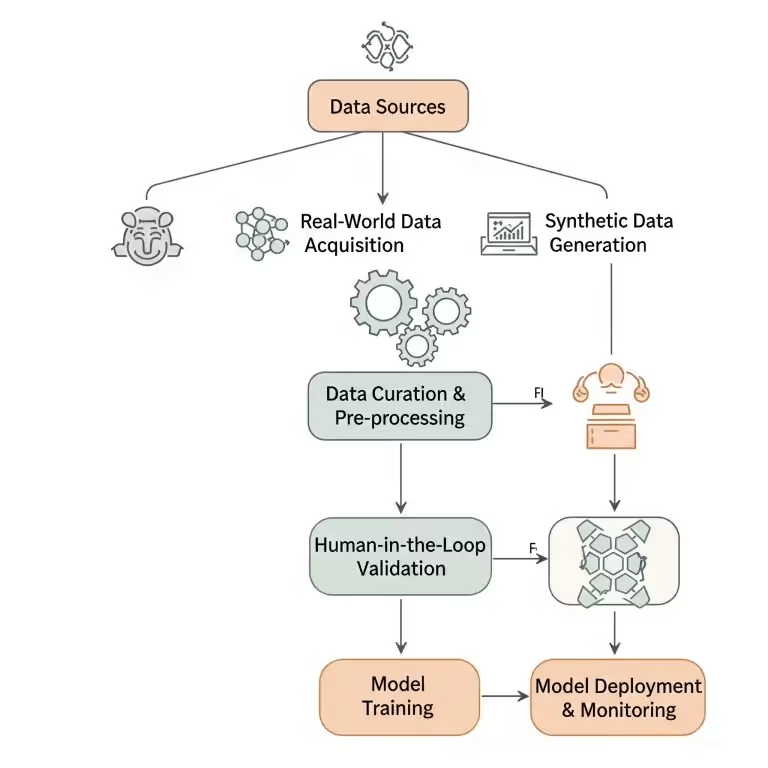

The Train-Augment-Validate Workflow

Successful synthetic data implementation follows a specific sequence: train foundational models with real patient data, augment training sets with curated synthetic data, then validate final performance against real-world benchmarks. This positions synthetic data as a supplement rather than a substitute for authentic clinical information.

True synthetic augmentation is statistically sophisticated. Data scientists apply methods like Synthetic Minority Over-sampling Technique (SMOTE), Maximum Likelihood Estimation (MLE), and other probabilistic or generative modeling techniques to ensure synthetic data reflects real-world distributions.

This is far more than “ask an AI to make patient records.” It’s a complex statistical challenge that, when done correctly, can expand datasets safely and meaningfully.

Validation is critical. Independent datasets, separate from both real and synthetic training data, reveal whether augmentation genuinely improves model performance or merely inflates dataset size without adding value.

Use Cases vs. No-Go Scenarios

Clear decision criteria help avoid dangerous applications while maximizing appropriate benefits. Use synthetic data for development workflows, proof-of-concept projects, vendor evaluation, and early prototyping where system functionality takes precedence over clinical accuracy.

Never use synthetic data as primary training sources for production clinical decision support systems, patient safety-critical applications, or final model validation. These scenarios require the full complexity and edge case representation that only real patient data provides.

Advanced Technical Safeguards

Protecting against circular training requires comprehensive data lineage tracking throughout development processes. Document the source of every training record and generation methods used for synthetic data creation. This documentation enables feedback loop detection before they compromise model reliability.

Validation frameworks should test model performance against diverse real-world datasets reflecting target patient populations. Monitor for systematic performance degradation in specific demographic groups or clinical scenarios that might indicate bias amplification from synthetic training data.

CTO Checklist: Advanced Technical Safeguards for Synthetic Data

- Track Data Lineage – Document every real and synthetic record’s source and generation method.

- Use Reproducible Notebooks – Leverage Jupyter or similar tools to log each synthetic data generation step.

- Validate Against Real-World Datasets – Test model performance on datasets reflecting target populations.

- Monitor Bias Amplification – Identify performance drops across demographics or clinical subgroups.

- Detect Feedback Loops Early – Ensure synthetic data does not inadvertently reinforce model errors.

- Review Generation Parameters Regularly – Confirm sampling methods, distributions, and augmentation techniques align with intended outcomes.

Implementation Checklist for Healthcare Organizations

Before deploying synthetic data solutions, establish technical validation requirements including bias testing, edge case evaluation, and performance comparison against real-data baselines. Document requirements clearly to guide development teams and support regulatory compliance efforts.

Compliance considerations must address data governance, privacy protection, and audit trail maintenance. Collaborate with privacy officers and legal teams to establish synthetic data policies for use, retention, and validation that align with organizational risk tolerance and regulatory requirements.

Performance monitoring should continue throughout model lifecycles, comparing synthetic-trained models against real-data performance benchmarks to detect drift and ensure continued value rather than systematic error introduction.

Final Takeaways

Synthetic data represents a transformative tool for healthcare AI development when applied within appropriate risk boundaries. It can accelerate development workflows, enable rapid prototyping, and support vendor evaluations without compromising patient privacy or triggering compliance bottlenecks.

Success requires understanding fundamental limitations and risks that synthetic data introduces. Bias amplification, circular training problems, and missing edge cases can undermine patient safety when synthetic data moves beyond development into production clinical systems.

The strategic approach positions synthetic data as a development accelerator rather than production substitute. Train with real data, augment carefully with synthetic data, and validate thoroughly with independent real-world datasets. This framework harnesses synthetic data benefits while maintaining the safety and reliability that healthcare applications demand.

Real and synthetic data will "coexist, each optimized for different use cases" – much like flight simulators enable pilot training while real flight hours remain essential for certification.

Frequently Asked Questions

Can synthetic data completely eliminate HIPAA compliance requirements?

Synthetic data reduces HIPAA exposure significantly but doesn't eliminate all compliance considerations. Organizations still need proper governance, access controls, and audit trails, especially when synthetic data is generated from real patient records.

What's the biggest risk when implementing synthetic data in healthcare AI?

The greatest risk is treating synthetic data as equivalent to real patient data for clinical decision-making. Models trained primarily on synthetic data may miss critical edge cases or exhibit biased behavior that becomes apparent only when deployed in real clinical environments.

How do leading healthcare organizations validate synthetic data quality?

Organizations like Sheba Medical Center use multi-step validation including clinical expert review, statistical comparison against real data distributions, and performance testing on independent datasets before trusting synthetic data for research or development.

When should we avoid using synthetic data entirely?

Avoid synthetic data for final regulatory submissions, production clinical decision support systems, and any application where artificial variation of data provides no benefit to improving the accuracy and reliability of a system. These scenarios require the full complexity and edge case representation that only real patient data can provide.

Alex leads Invene's engineering teams to deliver secure, compliant, and deadline-driven healthcare solutions. Previously, he successfully exited his own consulting company, where he built innovative products integrating hardware and software. He's passionate about speaking and helping business leaders understand technology so they can make better, more informed decisions. He also mentors engineers and regularly explores white papers to stay ahead of the latest technological advancements.

Ready to Tackle Your Hardest Data and Product Challenges?

We can accelerate your goals and drive measurable results.